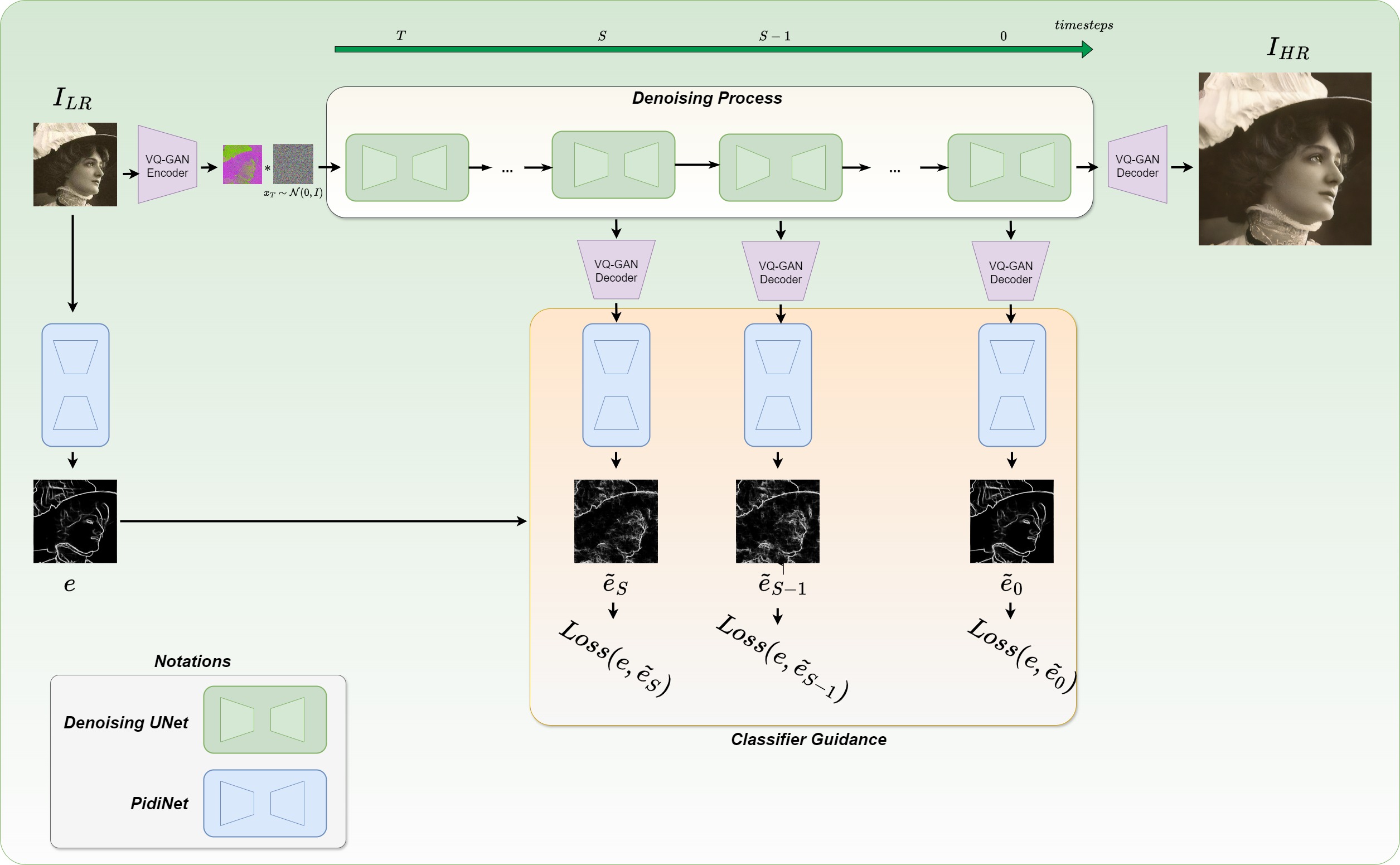

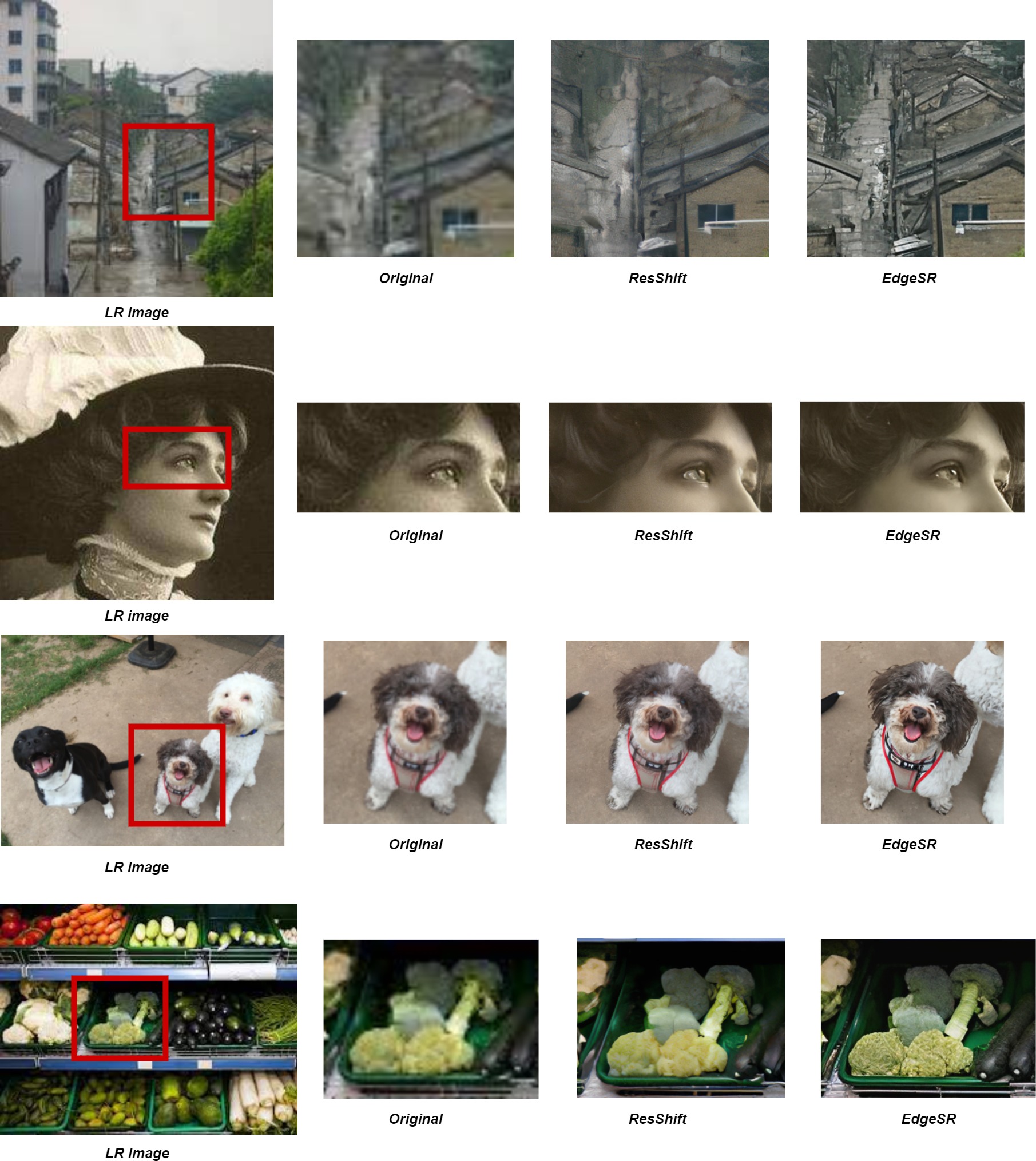

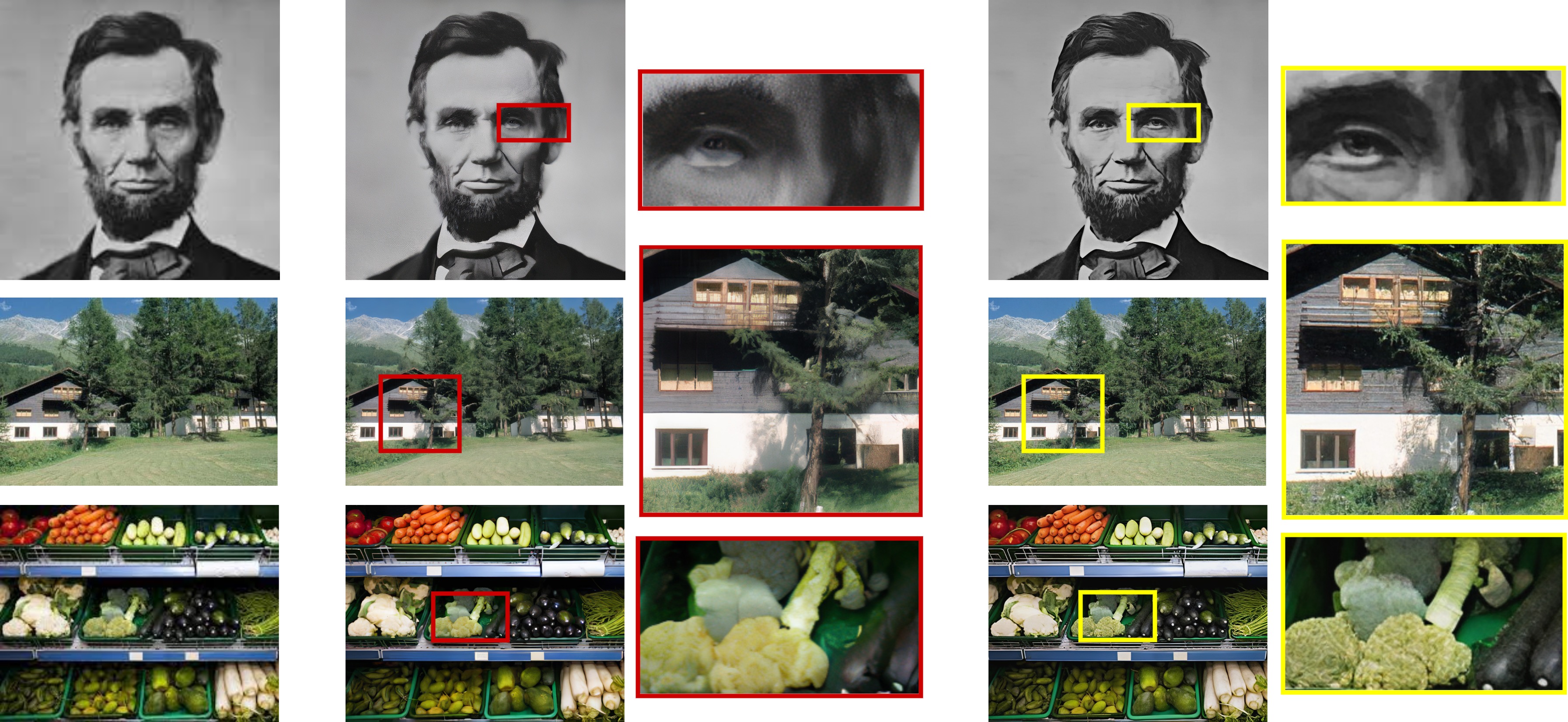

Our method, EdgeSR, is a super-resolution technique designed to enhance image clarity and detail at the edge level. The frames above compare the output of ResShift with that of EdgeSR. EdgeSR recovers sharper, better-defined edges from low-resolution images, and the improvement in edge fidelity is clearly visible.